deobfuscate: AI & ML

Bytes and Bots and LLMs, Oh My!

I took quite some time to write this post because I didn’t believe myself nor the industry was ready for the conversation when AI and ML started becoming a topic of discussion roughly around 2022; two-ish years ago. I felt this way primarily because it was too new and fresh, and there were simply too many topics to discuss about its power and usages; I wanted it to be a bit more understood and standardized before trying to approach it. Since then, everyone and their mother has a topic piece on how AI and ML will come to save the day and will sprinkle it into their marketing however possible.

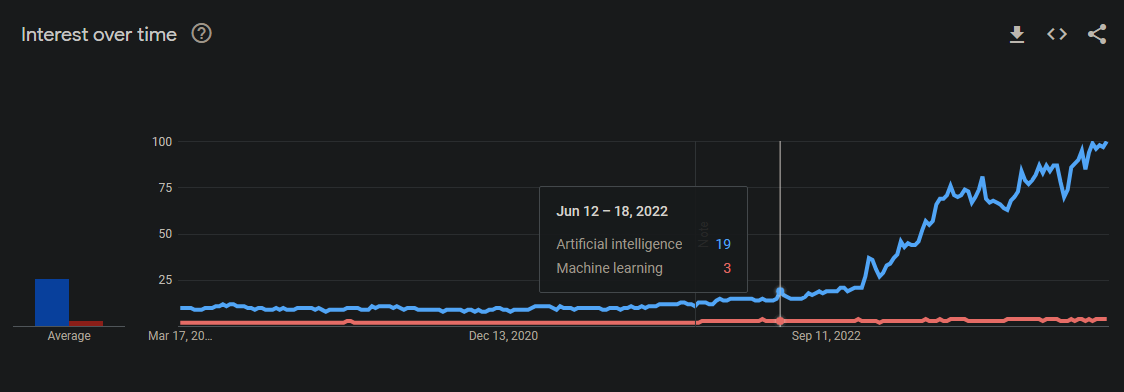

Google Trends Data on Artificial Intelligence and Machine Learning, with the first big spike around June, 2022

Despite all of this, the study of AI has been around for decades, and we’ve already been using it in a few capacities without knowing it. So what I’m aiming to do here is break through the noise and break down how these two absolutely amazing and powerful concepts can bring the SOC a new force multiplier, unlike anything we’ve had in the last decade in terms of security solutions.

Artificial Intelligence vs Machine Learning

The first thing that needs to be done is explain what the heck these concepts even are:

Artificial Intelligence, or AI, is the broader concept of machines being able to carry out tasks in a way that we would consider "smart." It's a vast field aiming to mimic human cognitive functions. Think of AI as the aspiration to craft a digital brain - not just any brain, but one that could potentially outsmart us in many tasks or, at the very least, out-compute us. AI is the grand umbrella under which various technologies, including ML, reside. It's like the goal of creating a master chef. The chef doesn't just cook; they understand flavors, plan menus and adapt to new culinary challenges. In the digital world, AI is that chef, striving to understand, plan, and adjust.

Machine Learning, on the other hand, is a subset of AI. It's the "learning" part of AI, where machines improve their performance on specific tasks over time without being explicitly programmed. It's like teaching our chef to perfect a recipe through trial and error. ML algorithms use statistical techniques to enable machines to improve tasks with experience. ML is the secret sauce powering most of the AI advancements we're witnessing. ML is behind the scenes, learning and evolving from image recognition in social media to anomaly detection in cybersecurity.

Since AI itself is the broader topic, I will focus on it for the remainder of our discussion and will poke into the learning aspects as they become relevant later.

Deepening Explanations

The road doesn’t stop there. AIs can be broken into various types and categorizations. For instance:

Narrow vs General: These two types, also referred to as Weak and Strong, respectively, focus on the amount of tasks being performed. Narrow focus on a single or just a handful of closely related tasks. Don’t let the name fool you; these kinds of AIs perform at an incredible sophistication given their minimal scope and make up the AIs we interact with on a daily basis. General, however, is still a hypothetical form of AI and is considered a state in which an AI becomes close to human consciousness. It can learn and apply its intelligence dynamically and broadly, similar to humans.

Limited Memory vs Reactive Machines: Most AI currently reside in the limited memory category, such as self-driving cars and LLM chatbots like ChatGPT. These provide the capability to use historical data to make decisions. Meanwhile, reactive machines are trained to respond to specific situations or inputs but can’t ‘form’ or use memories/historical data. This would put AI like IBM’s Deep Blue Chess AI into this category.

In the future, current AI theory predicts that we will have other types of AI. One of them is the Theory of Mind, a concept where AI can understand human emotions, beliefs, and behaviors. Even further, the eventuality of Self-aware AI, where they would develop their sense of consciousness and identity.

Current Applications of AI

AI is trending in every industry, which isn’t surprising. The amount of uplift logical processes can perform when it is even mildly intelligent is staggering. For example, here is a non-exhaustive list:

Source: https://www.reddit.com/r/memes/comments/13dwhid/ai_is_everywhere_these_days/

Healthcare and Medicine:

Disease Diagnosis: AI algorithms help diagnose diseases, including cancer, more accurately and quickly than traditional methods.

Drug Discovery and Development: AI speeds up the drug discovery process and helps predict the success rate of drugs.

Personalized Medicine: Tailoring treatment plans for individuals based on their genetic makeup and lifestyle.

Business and Industry:

Customer Service: AI-powered chatbots and virtual assistants provide 24/7 customer support and personalized interaction.

Supply Chain Management: AI optimizes supply chains, predicting demand and managing inventory more efficiently.

Predictive Analytics: Used for market analysis, consumer behavior prediction, and risk assessment.

Finance:

Algorithmic Trading: AI systems analyze market trends and execute trades at a speed and volume beyond human capability.

Fraud Detection: Identifying unusual patterns to prevent fraudulent transactions.

Credit Scoring: Automated and more accurate credit scoring systems.

Transportation:

Autonomous Vehicles: Self-driving cars and drones use AI for navigation and decision-making.

Traffic Management: AI algorithms optimize traffic flow, reducing congestion and improving safety.

Education:

Personalized Learning: AI tailors educational content to individual students' learning styles and pace.

Automation of Administrative Tasks: Grading and handling of administrative paperwork.

Entertainment and Media:

Content Recommendation: AI powers recommendation engines on platforms like Netflix, YouTube, and Spotify.

Game Development: Creating more realistic and responsive gaming environments.

Retail:

Personalized Shopping Experience: AI offers customized shopping recommendations and improves customer service.

Inventory Management: Predictive analytics for maintaining optimal stock levels.

Security and Surveillance:

Facial Recognition: Used for security purposes, though it raises privacy concerns.

Cybersecurity: AI detects and responds to cyber threats more efficiently than traditional methods.

Research and Space Exploration:

Data Analysis: Handling and interpreting vast amounts of data from research studies or space missions.

Robotics: Deployed in space missions for exploration and performing tasks in environments hostile to humans.

Artificial Creativity:

Art and Music Generation: AI algorithms create art and music, pushing the boundaries of creativity.

Agriculture:

Crop Monitoring and Analysis: AI helps monitor crop health and optimize farm management.

Language Processing:

Translation Services: Real-time language translation and interpretation.

The Double Edge Sword

With all these examples in mind, it is easy to see how these capabilities will change our operations forever. However, some of you are keen enough to spot the growing issue: AI is a technology just the same as anything else, and as much as we can use it to help us, it is another system we also have to safeguard at the same time, which in turn adds to the complexity. AI will be both a blessing and a curse as we must adapt to a new way of data processing in which AIs can manipulate but also be manipulated. It's still low-complexity to get LLMs like ChatGPT or Gemini to give me things that would generally violate its policy, such as a type of attack called prompt injection.

We have seen prompt injection work in various ways, from tricking support bots powered by AI to guarantee services and refunds to robocaller AIs that get tricked into feeding you chocolate fudge recipes because you asked nicely. This will lead businesses to be careful in their implementation, primarily starting with internal tools that gently nudge their way into their external products and services.

Likewise, while not having an AI might save you from prompt injection, AIs give threat actors much broader capabilities. Many attackers can circumvent censors and policy blocks simply by running a local AI instance, like Llama and the hundreds of other models you can download and run locally on a system. These can help power attackers by generating custom code, supporting automation efforts, and even supporting dynamic decision-making and processing as they move through phases of recon, emulation, exploitation, etc. This capability keeps me up at night more than the liability and legal risks of having our own AI.

A final issue to touch on with machine learning is the issue known as ‘drift.’ As you train an AI, the model adjusts predictions based on variable statistical properties. While generally this is good, bad can occur typically due to changes in the data the model analyzes, where the original dataset no longer matches the new dataset. It can also happen when the technology changes, such as how data is collected, new data sources are introduced, or changes in how the model processes the data. As a byproduct of this, models can actually become less accurate and relevant over time. This concept is quintessentially the idea that too much studying crunch before the test can actually harm you more than help you. ML Drift is a complicated topic and is still being studied by AI engineers, but there are mitigations in place, such as using Human Supervised Models, using feedback loops like Human-In-The Loop or Automated Feedback that allow the AI to be ‘fact-checked’ or fact-check itself, and ensuring that adaptation strategies are used when adopting new data into the model.

Using AI to Our Advantage

I didn’t write this to be doom-and-gloom. The best counter to threat actors leveraging AI is having our own, plain and simple. We can do this by implementing tools with AI prebuilt in:

AI in your E/XDR that is powered by ML models that go off your organization’s data can generate a unique ‘fingerprint’ of data flow just for you that allows it to be more accurate and thorough in its analysis of behaviors within an environment. This makes behavioral analytics very powerful, as it can continuously keep a running memory for a baseline and doesn’t constantly require retraining or manual baselining. Exceptional models are supervised to prevent drift and are trained on a ‘running’ time of reference rather than a static one.

AI in your next-gen firewalls can monitor for abnormal behavior or behavior that falls outside of baseline. It can also conduct dynamic risk scoring and rule weights based on the activities it spots and determine when something is too strange to be typical. Likewise, using this with limited memory and ML trained on your connections makes ID/PS bypasses much more difficult as there is a level of ‘digital awareness’ now on your network (Skynet, anyone?).

AI assistants in tools can help guide incident response activities, which can be especially useful for junior folks who need guidance but don’t have immediate access to more senior folks. Even better, those same assistants could support troubleshooting and system restoration efforts if that tool is experiencing issues, helping system admins. The most powerful ones will engage with content development too, helping security engineers build detections and optimize the tool’s capabilities, maximizing returns on that investment.

AI in sandboxing capabilities can strip down and analyze software at an insanely rapid pace and perform it in a way that allows it to build a working memory of samples. Powerful ones will use this knowledge actually to begin profiling authors and can help assist in attribution, especially when the same author is targeting your organization. Believe it or not, this is one of the oldest AI use cases in security, as sandbox AI models have been around for quite some time.

As cool as Geralt is, this isn’t how all Threat Intel should be treated

AI in threat intelligence can onboard data streams and feeds from various vendors but break apart the noise to allow organizations to focus on datasets that matter to their particular industry and align to the organization's risks rather than broadly assuming ‘everything bad should be treated equally.’ Furthermore, they can work with your various tools to build a better operational and strategic understanding of threats and how they apply to you to create control guidance that fits the organization.

AI built into case management systems can use predictive modeling to understand when similar attacks are likely associated in some capacity. Likewise, they can help root out false positives before they reach triage analysts. They can also pair with the tools feeding them data to build robust understandings of incident response, assist in escalations, and reach out to other teams when necessary. AI can also automatically consolidate reporting efforts, making metrics far more approachable and less of a manual task.

There are a variety of additional capabilities that organizations can use AI for, and this merely scratches the surface. The future of AI will allow us to run predictive models and execute simulated attacks, coordinating a Purple Team effort that is nearly end-to-end automated by the machine and supervised by Red and Blue team experts. I predict this capability level is only a few years away from realization and could be tremendous in lowering the barrier to entry when doing more advanced/high maturity level testing and auditing against our controls.

A final thought about it is to think of it this way. Our current approach to security tooling typically involves a lot of detection engineering, basically statistical math with a splash of technical understanding of the logging sources. However, one thing we aren’t capable of doing well is dealing with an ever-expanding amount of data sources and variances. As our data becomes more generalized (filled with various sources), so too do our detections. While we can get granular at writing based on a handful of logging sources, writing detections that can run off a multitude of sources means we lose context or face the wrath of false positives. AI allows us to circumvent this issue as it can have profound, intricate knowledge of every data source and process it unlike any human mind could dream of. In reality, we can’t create the hundreds or thousands of detections needed to catch behaviors; instead, we need models that can perform these tasks to scale with the vast amounts of data we must deal with on a day-to-day basis.

Conclusions

Well, there are a lot of things to extrapolate from this post. AI is powerful, both in our hands and the hands of the threat actors who are looking to gain an advantage over us. In my opinion, we should be seeking efforts to bolster our defenses with this technology despite the risks of the ‘early adopter’ tax that plagues being on the bleeding edge of information technology. Attackers are already using AI now, and if we don’t jump on this effort now, organizations risk being much further behind than likely any technology-advantage gap we’ve seen in quite some time, which makes them very easy targets.

I also want to stress that some of this article is a gross simplification of AI and machine learning. Despite my best efforts and experience, I’m not an expert in this field; however, working with AI technologies for a few years has given me good insight into how they are developed and how powerful they can be. I highly suggest reading more into their capabilities. Likewise, anytime you consider adding a technology that features AI, you should do a lot of due diligence to ascertain precisely how it operates and what functions it performs.

Enjoy reading our content? Consider Sharing this post and Supporting Us!